Introduction

Prompt engineering is the practice of crafting instructions (prompts) for AI models, particularly large language models (LLMs). It revolves around discovering and designing prompts that reliably yield useful or desired outcomes.

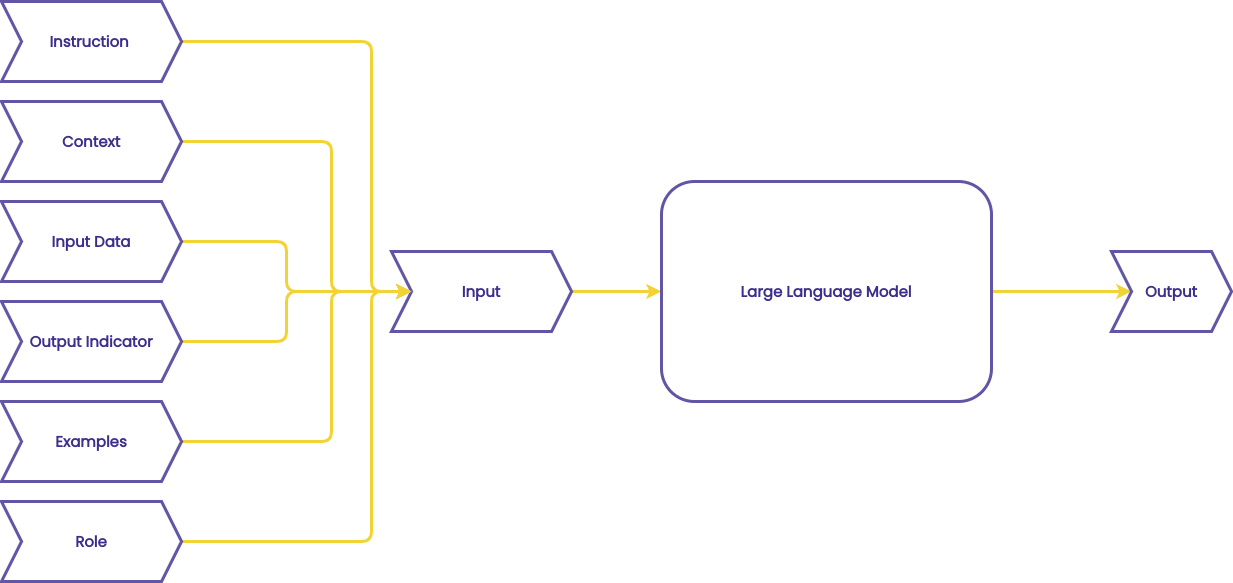

Key Elements of an Effective Prompt

Understanding what constitutes a well-structured prompt is fundamental. A prompt usually encompasses the following elements, tailored to the specific task at hand:

- Instruction/Task: This is the core of the prompt, clearly outlining what you want the AI to do. It should be specific and actionable, like "write a poem" or "translate this text to Spanish."

- Context: Providing background information or additional details can help the AI better understand the situation.

- Input Data: The specific input or question you want the model to process and respond to.

- Output Indicator: This specifies the desired output type or format, guiding the model on how to structure its response.

- Examples: Including a few examples of the desired output can serve as a reference for the AI, guiding it towards the kind of response you expect.

- Role: In some cases, specifying the role you want the AI to play can influence its response style and tone.

Each element plays a pivotal role in crafting a prompt that communicates effectively with the AI, ensuring that the output aligns closely with your expectations.
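As a rough illustration, the elements above can be assembled into a single prompt string. The following is a minimal Python sketch: the function name `build_prompt` and the section labels ("Task:", "Context:", and so on) are illustrative assumptions rather than a required format, and only the instruction and input data are treated as mandatory.

```python
from typing import Optional, Sequence, Tuple


def build_prompt(
    instruction: str,
    input_data: str,
    context: Optional[str] = None,
    output_indicator: Optional[str] = None,
    examples: Sequence[Tuple[str, str]] = (),
    role: Optional[str] = None,
) -> str:
    """Assemble a prompt from its elements (hypothetical layout).

    Only the instruction and input data are required; every other
    element is included only when it is provided.
    """
    sections = []
    if role:
        sections.append(f"You are {role}.")
    if context:
        sections.append(f"Context: {context}")
    sections.append(f"Task: {instruction}")
    for example_input, example_output in examples:
        sections.append(f"Example input: {example_input}\nExample output: {example_output}")
    if output_indicator:
        sections.append(f"Output format: {output_indicator}")
    # The input goes last so the model reads it right before responding.
    sections.append(f"Input: {input_data}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_prompt(
        instruction="Translate the text to Spanish.",
        input_data="Good morning, everyone.",
        role="a professional translator",
        output_indicator="Return only the translated sentence.",
    ))
```

The ordering is a judgment call: role and context come first to frame the task, and the input comes last so it sits immediately before the model's response.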

Strategies for Prompt Construction

To optimize interaction with AI models, adopting a methodical approach to prompt construction can significantly enhance the quality of the results. Here's a breakdown:

- Clarity and Conciseness: Aim for simplicity, ensuring your prompt is easily understandable.

- Rich Contextualization: Supply the model with sufficient context to ground its responses.

- Directive Use: Describe the desired output style in detail, leveraging specific directives or referencing relevant personas when necessary.

- Explicit Output Requests: Clearly articulate the type of output you're seeking. Formulating your prompt as a question, starting with who, what, where, when, why, and how, can be particularly effective.

- Incorporate Examples: Providing an example response can guide the model in understanding the expected format or content of its output.

- Break Down Complex Tasks: Split a complex task into smaller, sequential subtasks and prompt the model for each in turn, rather than asking for everything in a single prompt.

- Experiment and Iterate: Prompt engineering is an iterative process. Experimenting and then refining prompts based on the outcomes is key to crafting effective prompts.
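Breaking down a complex task is often implemented as a chain of prompts, where each subtask's output becomes the next subtask's input. The following is a minimal sketch under that assumption: `run_chain` is a hypothetical helper, and `model` stands in for whatever function actually calls your LLM.

```python
from typing import Callable, List

# Hypothetical stand-in for a real LLM call: any function that takes a
# prompt string and returns the model's text response.
Model = Callable[[str], str]


def run_chain(model: Model, task_input: str, steps: List[str]) -> str:
    """Run a complex task as a sequence of smaller prompts.

    Each step's instruction is combined with the previous step's output,
    so the model handles one subtask at a time.
    """
    current = task_input
    for instruction in steps:
        prompt = f"{instruction}\n\nInput:\n{current}"
        current = model(prompt)
    return current


if __name__ == "__main__":
    # Echo model for demonstration: reports which instruction it received.
    def echo_model(prompt: str) -> str:
        return "handled: " + prompt.splitlines()[0]

    steps = [
        "Extract the key facts from the report.",
        "Group the facts by topic.",
        "Write a three-sentence summary of the grouped facts.",
    ]
    print(run_chain(echo_model, "Quarterly report text goes here.", steps))
```

Keeping each step small also makes iteration easier, since a weak intermediate output points to the specific prompt that needs refining.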

Prompt Engineering Techniques

Prompt engineering is not a one-size-fits-all approach. Depending on the task and on how familiar the LLM is with it, different techniques can be applied.

Zero-shot Prompting

Zero-shot prompting asks a large language model (LLM) to perform a task without including any examples of that task in the prompt. The model is expected to tackle the assignment based solely on its pre-existing knowledge. Modern LLMs usually exhibit strong zero-shot capabilities.
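A zero-shot prompt contains only the instruction and the input, with no demonstrations. This minimal sketch uses a hypothetical `zero_shot_prompt` helper and an assumed "Text:/Answer:" layout.

```python
def zero_shot_prompt(instruction: str, text: str) -> str:
    """Build a zero-shot prompt: an instruction and the input, with no examples."""
    return f"{instruction}\n\nText: {text}\nAnswer:"


if __name__ == "__main__":
    print(zero_shot_prompt(
        "Classify the sentiment of the text as positive, negative, or neutral.",
        "The battery lasts all day, but the screen scratches easily.",
    ))
```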

Few-shot Prompting

Here, you provide the model with a few examples of the task, each pairing an input with its desired output. These examples let the model "learn" the task in context, so its responses align more closely with the expected format and content.

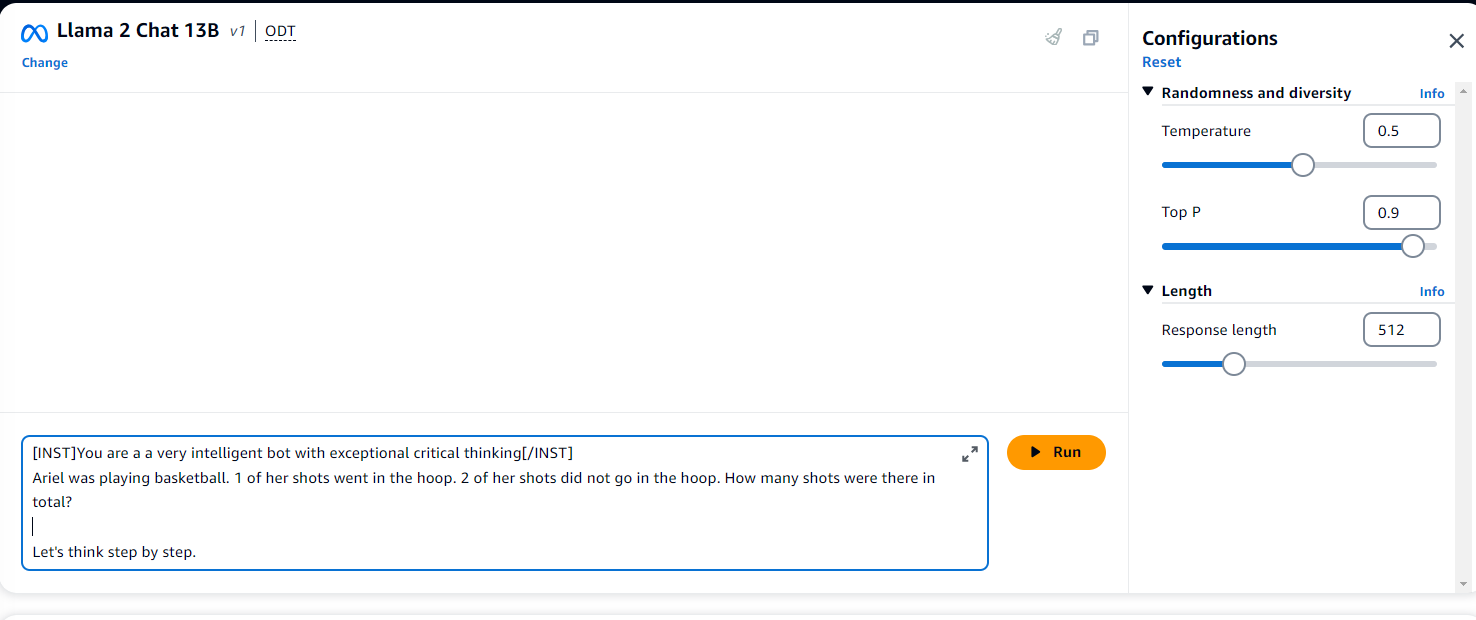
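A few-shot prompt can be built by formatting each demonstration exactly like the final query and leaving the last output slot empty for the model to complete. The helper `few_shot_prompt` and its default "Text:"/"Sentiment:" labels are assumptions for illustration.

```python
from typing import Sequence, Tuple


def few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
    input_label: str = "Text",
    output_label: str = "Sentiment",
) -> str:
    """Build a few-shot prompt from (input, desired output) pairs.

    Each demonstration uses the same layout as the final query, and the
    query's output slot is left empty for the model to complete.
    """
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"{input_label}: {example_input}\n{output_label}: {example_output}\n")
    parts.append(f"{input_label}: {query}\n{output_label}:")
    return "\n".join(parts)


if __name__ == "__main__":
    demos = [
        ("I loved every minute of it.", "positive"),
        ("The plot made no sense.", "negative"),
    ]
    print(few_shot_prompt("Classify the sentiment of each text.", demos, "A solid, if forgettable, film."))
```

Using an identical layout for demonstrations and query is what lets the model infer both the task and the expected output format.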

Chain-of-Thought (CoT) Prompting

This method is especially useful for complex reasoning tasks. By prompting the model to work through intermediate steps before giving its final answer, CoT prompting promotes a step-by-step problem-solving approach. CoT can be combined with either zero-shot or few-shot prompting to yield more logically coherent outputs; in the zero-shot case, simply encouraging the model to "think step by step" can elicit this mode of reasoning.

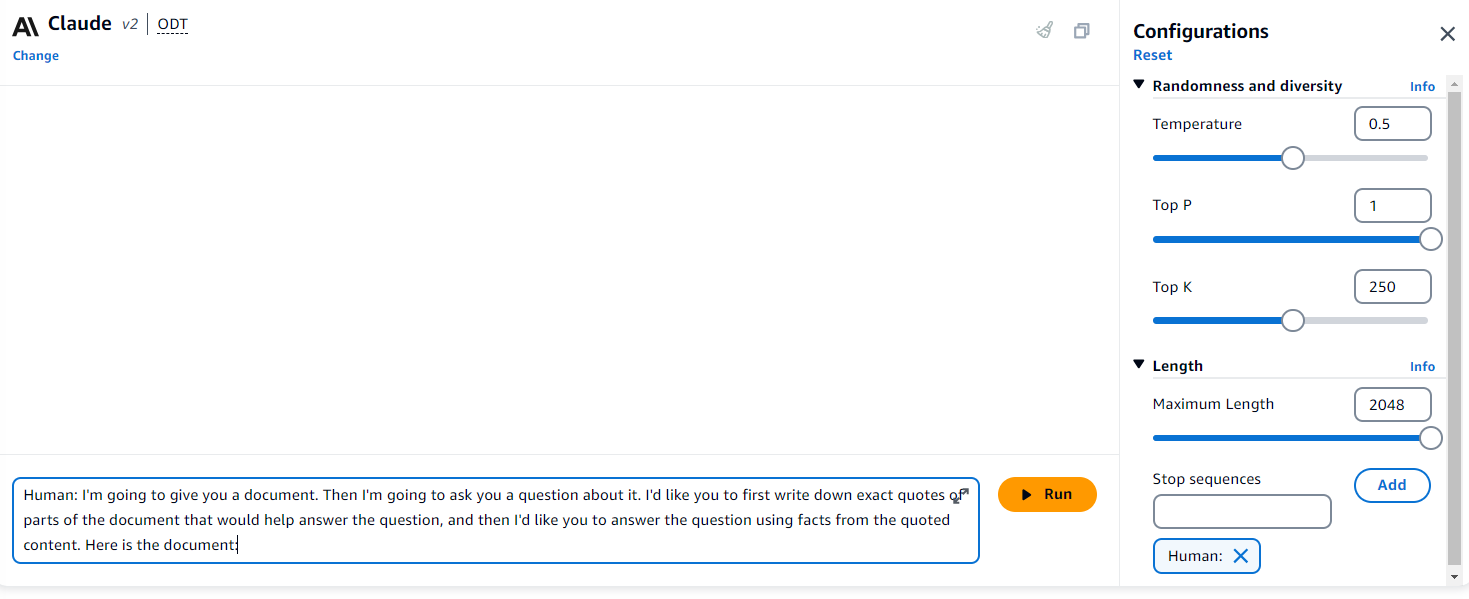
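One way to phrase both variants is sketched below: zero-shot CoT appends a step-by-step cue to the question, and few-shot CoT additionally prepends a worked example whose answer spells out its reasoning. The `cot_prompt` helper and the Q/A layout are illustrative assumptions.

```python
from typing import Optional


def cot_prompt(question: str, worked_example: Optional[str] = None) -> str:
    """Build a chain-of-thought prompt.

    Without a worked example this is zero-shot CoT: the question is followed
    by a cue asking the model to reason step by step. With a worked example
    (a question plus its step-by-step solution) it becomes few-shot CoT.
    """
    prefix = f"{worked_example}\n\n" if worked_example else ""
    return f"{prefix}Q: {question}\nA: Let's think step by step."


if __name__ == "__main__":
    example = (
        "Q: A shop has 5 apples and buys 3 bags of 4 apples each. How many apples does it have now?\n"
        "A: It buys 3 * 4 = 12 apples. 5 + 12 = 17. The answer is 17."
    )
    print(cot_prompt("A library has 20 books and lends out 7. How many remain?"))
    print(cot_prompt("A library has 20 books and lends out 7. How many remain?", example))
```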

Tuning Prompt Parameters for Precise Results

The outcome of a prompt does not depend solely on its content but also on certain parameters that govern the model's response behavior. Understanding and adjusting these parameters allows for finer control over the results:

Temperature

This parameter controls the randomness of the model's responses. Lower values make the output more focused and predictable, which suits factual tasks, while higher values introduce more creativity and diversity.
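Temperature typically works by rescaling the model's raw token scores (logits) before they are converted to probabilities with a softmax. This minimal sketch, using made-up logits, shows how a low temperature concentrates probability on the top token while a high temperature spreads it out; it is not any specific provider's implementation.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw model scores (logits) to probabilities, scaled by temperature.

    Dividing the logits by a temperature below 1 sharpens the distribution
    toward the top token; a temperature above 1 flattens it.
    """
    if temperature <= 0:
        # APIs often treat 0 as greedy decoding; this sketch just rejects it
        # to avoid dividing by zero.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5]  # invented scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```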

Top_p (Nucleus Sampling)

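This parameter restricts sampling to the smallest set of most-probable tokens whose cumulative probability reaches the value of top_p. Lower values limit the model to its most likely tokens, yielding more focused, deterministic answers, whereas higher values allow for a broader range of responses.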
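A minimal sketch of nucleus filtering over a toy next-token distribution (the tokens and probabilities are invented for illustration): tokens are ranked by probability, kept until their cumulative probability reaches `p`, and the survivors are renormalized before sampling. The helper name `top_p_filter` is hypothetical.

```python
from typing import Dict


def top_p_filter(probs: Dict[str, float], p: float) -> Dict[str, float]:
    """Nucleus (top-p) filtering.

    Keep the smallest set of most-probable tokens whose cumulative
    probability reaches p, then renormalize so the kept probabilities sum to 1.
    """
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for token, prob in ranked:
        kept[token] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {token: prob / total for token, prob in kept.items()}


if __name__ == "__main__":
    next_token_probs = {"the": 0.5, "a": 0.3, "an": 0.15, "one": 0.05}
    for p in (0.4, 0.75, 1.0):
        print(p, top_p_filter(next_token_probs, p))
```

Because the cutoff depends on cumulative probability, the number of kept tokens adapts to how confident the model is at each step.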

Top_k

This parameter limits sampling to the k most probable tokens at each step. Smaller values keep the output focused and relevant, while larger values allow more creativity, so setting it controls the balance between the two.
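Top-k filtering keeps a fixed number of the most probable tokens, regardless of how much total probability they cover. This sketch applies it to an invented toy distribution and then samples from the surviving tokens with Python's `random` module; the helper names `top_k_filter` and `sample_token` are hypothetical.

```python
import random
from typing import Dict


def top_k_filter(probs: Dict[str, float], k: int) -> Dict[str, float]:
    """Keep only the k most probable tokens and renormalize their probabilities."""
    if k < 1:
        raise ValueError("k must be at least 1")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(prob for _, prob in ranked)
    return {token: prob / total for token, prob in ranked}


def sample_token(probs: Dict[str, float], rng: random.Random) -> str:
    """Draw one token according to the (filtered) probabilities."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    next_token_probs = {"the": 0.5, "a": 0.3, "an": 0.15, "one": 0.05}
    filtered = top_k_filter(next_token_probs, k=2)
    print(filtered)
    print(sample_token(filtered, random.Random(42)))
```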

Token Counts

Parameters like MinTokens and MaxTokenCount define the bounds of the model's output, ensuring responses are neither too brief nor excessively long. Additionally, StopSequences tell the model to end a response when a given string is generated, and numResults sets how many responses are generated, which is useful for comparing alternatives. The exact parameter names vary between model providers.
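The interaction of these limits can be illustrated with a simulated decoding loop. In this sketch, `max_tokens`, `min_tokens`, and `stop_sequences` are hypothetical stand-ins for the provider-specific parameters named above, and `token_stream` replaces a real model. It only detects a stop sequence at the end of the text generated so far, and it removes the stop sequence from the returned text.

```python
from typing import Iterable, List, Sequence


def generate_with_limits(
    token_stream: Iterable[str],
    max_tokens: int,
    min_tokens: int = 0,
    stop_sequences: Sequence[str] = (),
) -> str:
    """Consume tokens from a simulated model until a limit is hit.

    - Stops once max_tokens tokens have been produced.
    - Stops when the text ends with a stop sequence, but only after
      min_tokens tokens; the stop sequence itself is dropped from the output.
    """
    tokens: List[str] = []
    for token in token_stream:
        tokens.append(token)
        text = "".join(tokens)
        if len(tokens) >= min_tokens:
            for stop in stop_sequences:
                if stop and text.endswith(stop):
                    return text[: -len(stop)]
        if len(tokens) >= max_tokens:
            break
    return "".join(tokens)


if __name__ == "__main__":
    simulated = ["Paris", " is", " the", " capital", ".", "\n\n", "Next", " question"]
    print(repr(generate_with_limits(simulated, max_tokens=50, stop_sequences=["\n\n"])))
    print(repr(generate_with_limits(simulated, max_tokens=3)))
```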

Various LLM Examples

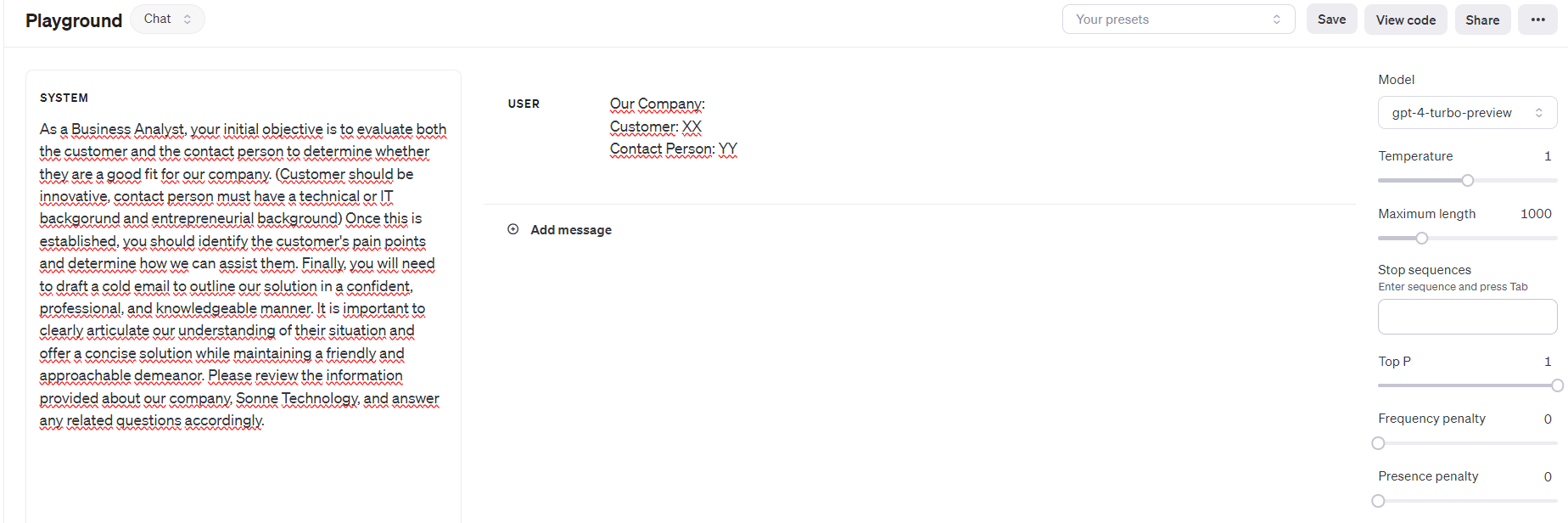

GPT-4-Turbo Detailed Analysis

Llama 2 13B Chain of Thought

Anthropic Claude v2 Grounding Content

Mastering the Art of Prompt Engineering

Prompt Engineering stands as a pivotal skill in the era of AI-driven innovation. By mastering this craft, technical professionals can unlock the full potential of language models, transforming AI from a mere tool into a collaborative partner capable of driving significant advancements. Whether it's through refining the elements of your prompts, experimenting with different prompting techniques, or finely tuning model parameters, each step you take enhances your ability to interact more meaningfully with AI. The journey of Prompt Engineering is continuous and ever-evolving; embarking on it not only amplifies your technical prowess but also paves the way for groundbreaking discoveries and solutions in your field.

In conclusion, Prompt Engineering emerges not just as a technical capability but as a vital language of collaboration between humans and AI. As we stand on the brink of a new era where AI's role becomes increasingly integral across sectors, the mastery of prompt engineering offers a powerful toolset for harnessing its potential.